Protocols Instead Of Platforms: Rethinking Reddit, Twitter, Moderation And Free Speech

from the protocols-not-platforms dept

Right. By now you've heard about Reddit's new content moderation policy, which (in short) is that it will continue to ban illegal stuff and will work hard to make "unpleasant" stuff harder to find. There is an awful lot of devil in very few details, mainly around the rather vague "I know it when I see it" standards being applied. So far, I've seen two general kinds of reactions, neither of which makes much sense to me. On one side, you have the free speech absolutists who (incorrectly) think that a right to free speech means a right to bother others with their speech. They're upset about any kind of moderation at all (though apparently at least some are relieved that racist content won't be hidden entirely). On the flip side, there's lots and lots and lots of moralizing about how Reddit should just outright ban "bad" content.

I think both points of view are a little simplistic. It's easy to say that you "know" bad content when you see it, but then you end up in crazy lawsuits like the one we just discussed up in Canada, where deciding what's good and what's bad seems to be very, very subjective.

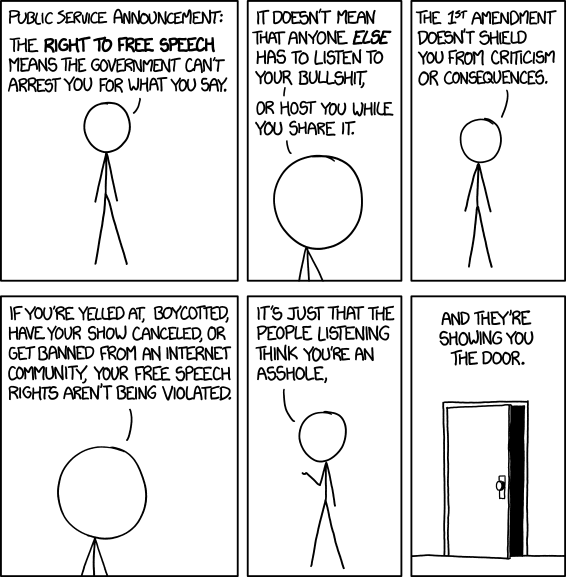

I'm a big supporter of free speech, period. No "but." I also worry about what it means for freedom of expression when everyone has to rely on intermediaries to "allow" that expression to occur. At the same time, I recognize that platforms have their own free speech rights to moderate what content appears on them. I also recognize that having no moderation at all often leads to platforms being overrun and becoming useless: first with spam and then, once a platform gets large enough, with trolling and other immature behavior that drives away more intelligent and inspired debate. This is different from arguing that certain content shouldn't be spoken or shouldn't be allowed to be spoken; it's just that maybe it doesn't belong in a particular community. Obligatory xkcd:

However, in thinking about all of this (and the similar struggles that Twitter, in particular, has been having), I've been wondering whether the problem is that we put the burden of "protecting free speech" on platforms, when that's not the best role for them. These platforms serve several different purposes, all of which tend to get conflated into one. They are places to post content (expression), but they are also places to connect with others and places to discover content.

And if we're serious about protecting free expression, perhaps those things should be separated. Here's a thought experiment that is only half-baked (and I'm hoping many of you will help continue the baking in the comments below). What if, instead of being full-stack platforms for all of those things, they were split into an open, distributed protocol for the expression, while the company continued to play the other roles of connecting people and helping with discoverability? This isn't necessarily an entirely crazy idea. Ryan Charles, who worked at Reddit for a period of time, notes that he was hired to build such a thing, and is apparently trying to do so again outside of the company. And plenty of people have discussed building a distributed Twitter for years.

But here's the big question: in such a scenario, is there still room for Reddit or Twitter the company if they no longer host the content themselves? I'd argue yes and, in fact, that it could strengthen the business models for both, though it would also open them up to more competition (which would be a challenge).

Think of it this way: if they were designed as protocols, where you could publish the content wherever you want, including on platforms that you yourself control, then people would be free to speak their minds as they see fit using these tools. And that's great. But then the companies would act as more centralized sources to curate and connect, and different companies could do that in different ways. Think of it like HTTP and Google. Via HTTP, anyone can publish whatever they want on the web, and Google then acts to make it findable via search.

In this world, anyone could publish links or content via an Open Reddit Post Protocol (ORPP) or Open Tweet Protocol (OTP), including the ability to push that content to the Corporate Reddit or the Corporate Twitter (or any competitors that spring up). The platform companies can then decide how they want to handle things. If they want a nice, pure and clean Reddit where only good stuff and happy discussions occur, they can create that. Those who want angry political debates can set up their own platform that accepts that kind of content. In short, the content can still be expressed, but individuals effectively get to choose whose filtering and discoverability system they prefer. If a site becomes too aggressive, or not aggressive enough, people can migrate as necessary.
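To make the split between expression and curation a little more concrete, here's a toy sketch in Python. Everything in it is hypothetical: ORPP and OTP don't exist as actual specs, so the `Post` record, the `Platform` class and the tag-based filtering policies are illustrative assumptions, not any real Reddit or Twitter API. What it tries to show is that every post stays published on its author's own host, while each platform only decides which of those posts it surfaces.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Post:
    # Hypothetical protocol message: who wrote it, where it lives, what it says.
    author: str
    origin: str  # the author's own host (illustrative URL, not a real service)
    body: str
    tags: frozenset[str] = frozenset()


# A platform is modeled here as nothing more than a filtering policy.
Policy = Callable[[Post], bool]


@dataclass
class Platform:
    name: str
    accepts: Policy

    def feed(self, posts: Iterable[Post]) -> list[Post]:
        # The platform never deletes anything from the protocol;
        # it only decides which posts it shows its own users.
        return [p for p in posts if self.accepts(p)]


# Everything published over the (hypothetical) open protocol, whatever the host.
published = [
    Post("alice", "https://alice.example", "Cute cat pictures", frozenset({"pets"})),
    Post("bob", "https://bob.example", "Heated political rant", frozenset({"politics", "flame"})),
    Post("spammer", "https://spam.example", "BUY NOW", frozenset({"spam"})),
]

# Two competing curators with different moderation choices.
calm = Platform("CalmPlace", lambda p: not (p.tags & {"flame", "spam"}))
debate = Platform("DebateHall", lambda p: "spam" not in p.tags)

for platform in (calm, debate):
    print(platform.name, [p.author for p in platform.feed(published)])
```

Running it prints `CalmPlace ['alice']` and then `DebateHall ['alice', 'bob']`: the same published posts, two different views. The `published` list itself is never modified, so a reader who dislikes one platform's filter can simply switch to the other without anyone's speech disappearing.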

This is by no means a perfect solution, and I'm sure it raises lots of other problems and challenges. The companies doing the filtering and the discoverability will still face all sorts of questions about how they want to make those choices. Are they looking to pretend that ignorant angry people don't exist in the world? Are they looking to provide forums to teach angry ignorant people not to be so angry and ignorant? Or do they want to be a forum just for the angry ignorant people whom the rest of the internet would prefer, as xkcd notes, to show the door?

And, of course, this would eventually lead to more questions about intermediary liability. We already see fights where people blame Google for the content that Google finds, even when it's hosted on other sites. If this sort of model took off and there were successful companies handling the filtering and discoverability portions, it's not hard to predict lawsuits arguing that it should be illegal for companies to link to certain content. But that's a different kind of battle.

Either way, this seems like a potential scenario that avoids both extremes: "all content must be allowed on these platforms even if they're being overrun by trolls and spam" and "we only let nice people talk around here." Neither is a world that is particularly fun to think about.

Filed Under: communities, free speech, moderation, platforms, protocols

Companies: reddit, twitter