from the provide-tools dept

BuzzFeed had a long and interesting article earlier this week noting Twitter's ongoing difficulty in figuring out an appropriate way to deal with the harassment and abuse often heaped upon certain users -- especially women and minorities. The article is worth reading -- even as Twitter disputes some of its claims. It's also noteworthy that this debate is not even remotely new. Last year, I wrote about it, suggesting that one possible solution is to switch Twitter from being a platform into being a protocol -- on which anyone could then build services. In that world, Twitter could offer various filters if it wanted -- while other providers could compete with different filters or services. The tweets could then flow without Twitter having to take responsibility for policing every one of them, but there would be options (possibly many options) for those who were dealing with abuse or harassment.
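To make the protocol idea a bit more concrete, here's a minimal Python sketch, assuming a shared stream of tweets that any service can read and a common interface that competing filter providers implement. Everything in it (`Tweet`, `FilterProvider`, `AllowEverything`, `NewAccountFilter`, `build_timeline`) is hypothetical and purely illustrative; it is not Twitter's API or any real protocol.

```python
from dataclasses import dataclass
from typing import Iterable, List, Protocol


@dataclass(frozen=True)
class Tweet:
    # Hypothetical minimal tweet record carried over the shared protocol.
    author: str
    text: str
    author_account_age_days: int


class FilterProvider(Protocol):
    # Any service (Twitter or a competitor) could implement this interface.
    def allows(self, tweet: Tweet) -> bool: ...


class AllowEverything:
    # A provider that passes the raw stream through unchanged.
    def allows(self, tweet: Tweet) -> bool:
        return True


class NewAccountFilter:
    # A competing provider that hides tweets from very new accounts.
    def __init__(self, min_age_days: int) -> None:
        self.min_age_days = min_age_days

    def allows(self, tweet: Tweet) -> bool:
        return tweet.author_account_age_days >= self.min_age_days


def build_timeline(stream: Iterable[Tweet], provider: FilterProvider) -> List[Tweet]:
    # The user, not the network, picks which provider curates their view.
    return [t for t in stream if provider.allows(t)]


stream = [
    Tweet("longtime_user", "Great thread on moderation", 2000),
    Tweet("brand_new_account", "you are terrible", 3),
]
print(len(build_timeline(stream, AllowEverything())))      # 2
print(len(build_timeline(stream, NewAccountFilter(180))))  # 1
```

The point of the shared interface is that the same underlying stream can be curated very differently depending on which provider a user chooses, without anyone deleting the tweets themselves.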

Not surprisingly, that kind of suggestion is unlikely ever to be adopted, but as I read through the BuzzFeed article, something else struck me. To some extent, the article seems a bit unfair in portraying some of Twitter's execs as willfully clueless about the abuse and harassment. It also repeatedly portrays those who support freedom of expression as somehow being unreasonable extremists. Here's one example:

Weeks later, when a rash of beheading videos appeared, Costolo gave similar takedown orders, causing Twitter’s free speech advocates, Gabriel Stricker and Vijaya Gadde, to call an emergency policy meeting.

Inside the meeting, attended by Costolo, Stricker, Gadde, and product head Kevin Weil (now Instagram’s product lead) and first reported by BuzzFeed News, tensions rose as Costolo’s desire to build a more palatable network that was marketable and ultimately attractive to new users clashed with Stricker and Gadde’s desire for radically free expression.

“You really think we should have videos of people being murdered?” someone who attended the meeting recalls Costolo arguing, while Stricker reportedly compared Costolo’s takedown of undesirable content to deleting the Zapruder film after objections from the Kennedy family. Ultimately, the meeting ended with the group deciding to carve out policy exceptions to keep up grisly content for newsworthiness, according to one person present. Though Stricker and Gadde won, one source described a frustrated Costolo leaving in disagreement. “I think if you guys have your way the only people using Twitter will be ISIS and the ACLU,” Costolo said, according to this person.

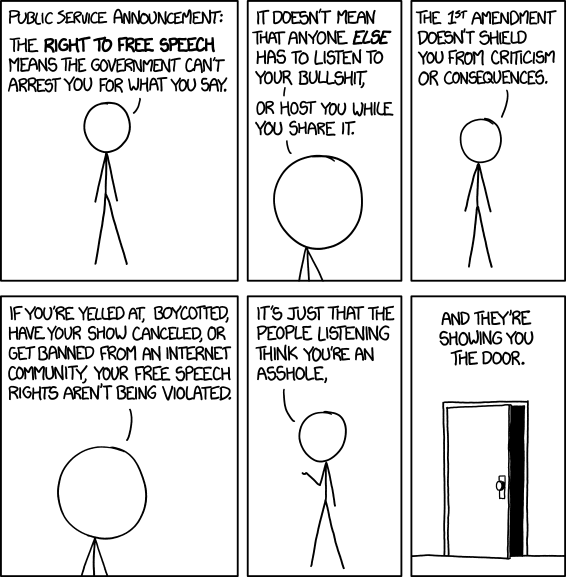

But I think part of the issue is that people are confused about the nature of free speech, à la the famed xkcd on the issue:

However, while most people take this xkcd to mean that it's right for sites to kick people off, I'd argue that its point is something Twitter itself should be thinking about. To date, many of its plans to deal with abuse seem to focus on kicking people off the site when they violate its terms of service. This has created a few flare-ups here and there from people who feel the practice is improper or unfair -- and that the process is arbitrary. But some of that may stem from the fact that people at Twitter are just as confused about the point of the xkcd above as many of its users are.

That is, I think the "free speech wing" of Twitter is absolutely correct that the site should bend over backwards to support the right and ability of people to say pretty much whatever they want to say. But what Twitter doesn't need to do is force others to listen. In other words, Twitter should be focused, heavily, on building much, much, much, much more flexible and robust tools for users to curate their own experience. If users want to let in everything, they should be able to do so. If they want to block certain types of users, they should be able to do so. If they want to block based on keywords, they should be able to do so.
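As a rough illustration of those three choices, here's a small Python sketch of per-user curation settings. The `CurationSettings` class, its field names, and user "type" labels like `"new_account"` are all hypothetical, made up for this example; nothing here reflects how Twitter actually stores or applies settings.

```python
import re
from dataclasses import dataclass, field
from typing import FrozenSet, Set


@dataclass
class CurationSettings:
    # Hypothetical per-user settings; field names are illustrative only.
    allow_everything: bool = False
    blocked_user_types: Set[str] = field(default_factory=set)
    blocked_keywords: Set[str] = field(default_factory=set)

    def hides(self, author_types: FrozenSet[str], text: str) -> bool:
        """Return True if this user has chosen not to see this tweet."""
        if self.allow_everything:
            # The user opted to let in everything.
            return False
        # Block by type of user, e.g. accounts tagged "new_account".
        if self.blocked_user_types & author_types:
            return True
        # Block by keyword: whole-word, case-insensitive, so that
        # blocking "troll" does not also hide "trolley".
        return any(
            re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE)
            for word in self.blocked_keywords
        )


settings = CurationSettings(blocked_user_types={"new_account"}, blocked_keywords={"troll"})
print(settings.hides(frozenset(), "You absolute TROLL"))        # True
print(settings.hides(frozenset(), "Took the trolley to work"))  # False
print(settings.hides(frozenset({"new_account"}), "hello"))      # True
```

Whole-word matching is one simple way to keep keyword blocks from being as crude as plain substring matching; a real tool would likely need much more than this.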

To date, Twitter has offered fairly crude and mostly ineffective tools for users who are trying to deal with harassment. There is the ability to report abuse, but that leads to all sorts of problems and arbitrary decisions about who is violating the terms of service and who is not. The other two tools are the ability to "block" certain users and to "mute" others. There's a subtle difference here: if you block someone, they can discover that (and that leads to its own set of problems). If you "mute" them, they can still read your tweets; you just won't see theirs, and they won't know. People have created "blocklists," but again, these tend to be pretty crude and ineffective.
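The block/mute distinction described above can be written down as a tiny visibility model. This Python sketch encodes only what that paragraph says (a blocked user loses access to your tweets and can find out; a muted user keeps reading and isn't told) and is a hypothetical simplification, not how Twitter actually implements either feature. The `Account` class and function names are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Account:
    # Simplified model of the two relationships described above.
    name: str
    blocked: Set[str] = field(default_factory=set)  # names this account has blocked
    muted: Set[str] = field(default_factory=set)    # names this account has muted


def can_read_tweets_of(reader: Account, author: Account) -> bool:
    # Blocking cuts the blocked user off from the blocker's tweets;
    # muting does not, so a muted user can still read them.
    return reader.name not in author.blocked


def sees_in_own_timeline(viewer: Account, author: Account) -> bool:
    # Both blocking and muting keep the other person's tweets out of your view.
    return author.name not in viewer.blocked and author.name not in viewer.muted


def knows_they_were_blocked(target: Account, actor: Account) -> bool:
    # A block is discoverable; muting is silent, so there is nothing to discover.
    return target.name in actor.blocked


me = Account("me", blocked={"harasser"}, muted={"annoying"})
harasser, annoying = Account("harasser"), Account("annoying")
print(can_read_tweets_of(harasser, me), can_read_tweets_of(annoying, me))      # False True
print(sees_in_own_timeline(me, harasser), sees_in_own_timeline(me, annoying))  # False False
print(knows_they_were_blocked(harasser, me), knows_they_were_blocked(annoying, me))  # True False
```

Laid out this way, it's easy to see how few knobs users actually get: two relationships, each all-or-nothing, applied one account at a time.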

Giving end users a full suite of tools to curate their own experience -- combined with the ability to share the "recipes" they create -- could actually be super powerful. For example, say I don't want to view tweets from users who have had accounts for less than 6 months (a lot of abuse comes from new users) or who haven't actually uploaded a profile image (so-called "eggs"). Let me create that as an option -- and then share that "filter" or "recipe" for others to use. Someone else could create a filter/recipe that only shows notifications from users who have more than 1,000 followers and who have tweeted at least twice per week over the last year. Or maybe a filter that automatically blocks anyone a particular politician has retweeted. The possibilities go on and on.
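One way to make recipes shareable is to treat them as plain data that anyone can publish and load. Here's a minimal Python sketch along those lines, assuming an account's stats are already available; the rule names, the `AccountStats` fields, the 183-day stand-in for "6 months", and the `@some_politician` handle are all hypothetical. It also reads "at least twice per week" as an average over 52 weeks, which is just one interpretation, and it hides matching accounts rather than blocking them.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, List, Set


@dataclass
class AccountStats:
    # Hypothetical per-account stats a recipe can check against.
    account_age_days: int
    has_profile_image: bool
    followers: int
    tweets_last_year: int
    retweeted_by: Set[str]  # accounts that have retweeted this user


def rule_passes(rule: Dict[str, Any], a: AccountStats) -> bool:
    kind = rule["rule"]
    if kind == "min_account_age_days":
        return a.account_age_days >= rule["value"]
    if kind == "require_profile_image":  # filters out "eggs"
        return a.has_profile_image
    if kind == "followers_over":
        return a.followers > rule["value"]
    if kind == "min_tweets_per_week":  # averaged over the last 52 weeks
        return a.tweets_last_year / 52 >= rule["value"]
    if kind == "not_retweeted_by":
        return rule["account"] not in a.retweeted_by
    raise ValueError(f"unknown rule: {kind}")


def shows(recipe: List[Dict[str, Any]], a: AccountStats) -> bool:
    # An account gets through only if it passes every rule in the recipe.
    return all(rule_passes(r, a) for r in recipe)


no_eggs_or_new = [
    {"rule": "min_account_age_days", "value": 183},  # roughly six months
    {"rule": "require_profile_image"},
]
established_only = [
    {"rule": "followers_over", "value": 1000},
    {"rule": "min_tweets_per_week", "value": 2},
]

shared = json.dumps(no_eggs_or_new)  # publish the recipe as text
imported = json.loads(shared)        # someone else loads it unchanged

egg = AccountStats(10, False, 3, 40, set())
veteran = AccountStats(2000, True, 5000, 900, {"@some_politician"})
print(shows(imported, egg), shows(imported, veteran))  # False True
print(shows(established_only, veteran))                # True
print(shows([{"rule": "not_retweeted_by", "account": "@some_politician"}], veteran))  # False
```

Because a recipe is just a list of rules, it can be copied, tweaked, and combined by other users without any of them needing to write code.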

To some extent, this opens up an opportunity to go back to the way Twitter felt in the early days. Somewhat hilariously (in retrospect), some people back then claimed that one of the reasons Twitter was so awesome was that there was "no spam or trolls," because you self-selected everyone you followed. It was a pure curation system. But that was only really true of the earliest incarnation of Twitter, before it incorporated replies, notifications and retweets. With those three things, your own curation skills only accounted for part of what you could be exposed to on Twitter. Suddenly there were lots of ways for third parties to insert themselves into the conversation. And, to some extent, this is actually really great. I've met some fantastic people and learned a lot thanks to Twitter's ability to connect people. But it also opened the door to trolls and harassment, and Twitter has just had so much difficulty figuring out what to do about it.

I get that there are two very large (and almost diametrically opposed) camps of people on Twitter: those who think Twitter should do nothing (Camp 1) ... and those who think it should be kicking a lot more people off the service (Camp 2). I think neither camp is being reasonable. For Camp 1, ignoring the fact that harassment and spam and other stuff happen is silly. If you have a 100% open system, it gets abused, period. It's a mess. But Camp 2 underestimates the subjective nature of what "harassment" is and the importance of being able to make use of a platform like Twitter. In other contexts, we've seen how arbitrary policies have resulted in questionable removals from sites, and that creates some serious problems.

Given that Twitter is unlikely ever to move to my original solution of offering a protocol rather than a platform, giving each user the power to better curate and filter their own experience seems like a much more workable idea. In fact, it could help bring back the feel of early Twitter, when users really did curate their entire feed. And, contrary to the concerns some supposedly expressed, as repeated in the BuzzFeed article, it might well increase user adoption of the platform rather than decrease it.

Filed Under: abuse, blocklists, filters, harassment, moderation, platforms, protocols

Companies: twitter